I used to work for Spirent, a test and measurement company that develops test systems for high-performance networking. The product was called Test Center, and together with a very talented team, we were developing the system used to test RoCEv2 systems. My focus was on Priority Flow Control (PFC) and how to enable it for the 800Gbps FPGA-based “Packet Analyzer and Generator” (also known as PGA).

However, I never took a proper look at Layer 4. This memo attempts to bridge that gap by describing the key elements of the InfiniBand Transport Layer (IBTL, L4) and visualizing them with a few diagrams.

The RoCEv2 frame

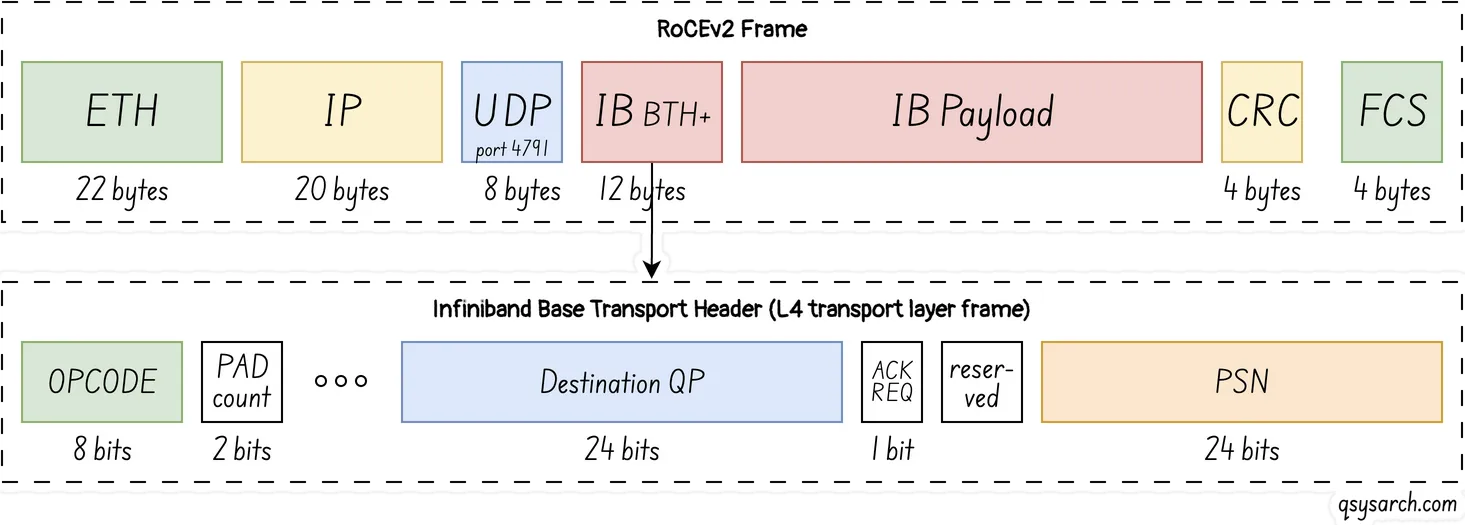

Let’s start with the actual RoCEv2 frame:

Base Transport Header (BTH)

Key fields in the BTH:

- Opcode: Used to specify the type of RDMA operation, such as RDMA_WRITE, RDMA_READ, …

- Destination QP: Used to distinguish between different destination Queue Pairs for a packet.

- Acknowledge Request: Indicates whether the receiving end needs to return an ACK for this packet.

- Packet Sequence Number (PSN): Used to track packet order for reliable delivery.

Since UDP is an unreliable protocol, frames can be lost, reordered, or duplicated, so the PSN is used to detect these cases and ensure correct delivery. This also means the IBTL needs a way to request retransmission of a frame. This is accomplished using the AETH header, which is described below.
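To make the field list above concrete, here is a minimal Python sketch that decodes the four fields from a raw BTH. It assumes the standard 12-byte BTH layout (opcode, flags, P_Key, a reserved byte plus 24-bit Destination QP, then the AckReq bit plus 24-bit PSN). The `Bth` class and `parse_bth` function are hypothetical names made up for this illustration, not part of any library.

```python
import struct
from dataclasses import dataclass

BTH_LEN = 12  # the BTH is 12 bytes long


@dataclass
class Bth:
    opcode: int
    pkey: int
    dest_qp: int
    ack_req: bool
    psn: int


def parse_bth(data: bytes) -> Bth:
    """Decode the first 12 bytes of the InfiniBand transport payload."""
    if len(data) < BTH_LEN:
        raise ValueError("BTH needs at least 12 bytes")
    # opcode (1 byte), flags (1 byte), P_Key (2 bytes), then two 32-bit words
    opcode, _flags, pkey, word1, word2 = struct.unpack("!BBHII", data[:BTH_LEN])
    return Bth(
        opcode=opcode,
        pkey=pkey,
        dest_qp=word1 & 0xFFFFFF,   # low 24 bits; the top byte is reserved
        ack_req=bool(word2 >> 31),  # AckReq is the top bit of the last word
        psn=word2 & 0xFFFFFF,       # low 24 bits
    )


# Example: opcode 0x0A, Destination QP 0x12, AckReq set, PSN 100
bth = parse_bth(bytes.fromhex("0a40ffff0000001280000064"))
print(bth)
```

Note that the PSN is only 24 bits wide, so any comparison between PSNs has to account for wraparound.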

Extended Transport Header (ETH)

The InfiniBand Transport Layer has several extended headers, used for specific functions. In the case of RDMA, the two most interesting are RETH and AETH.

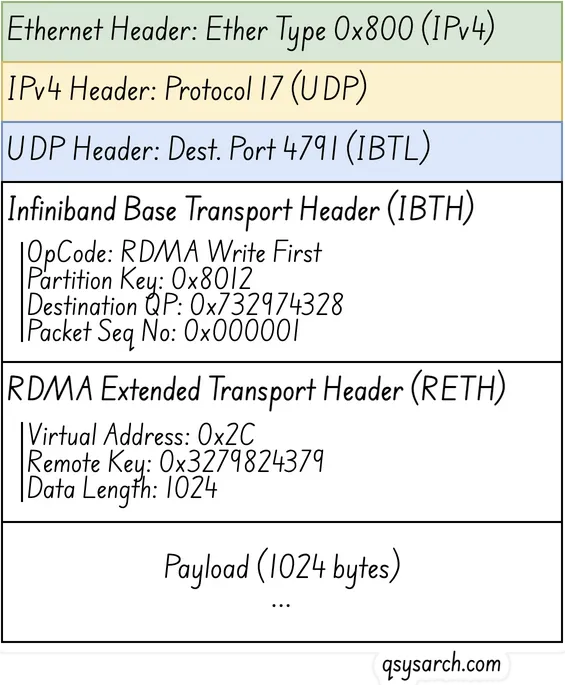

RDMA Extended Transport Header (RETH)

The RDMA_WRITE operation requires several elements, which are represented in the fields of the RETH extended header. This header tells the receiving hardware about the details of the write operation, including:

- Virtual Address: The destination memory address where data will be written

- Remote Key (R_Key): The access key that validates permissions for the memory region

- Length: The size of the data to be transferred
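The three fields above map onto a fixed 16-byte structure: a 64-bit virtual address, a 32-bit R_Key, and a 32-bit DMA length. A small sketch of how they could be decoded, where `Reth` and `parse_reth` are hypothetical names chosen for this example:

```python
import struct
from typing import NamedTuple

RETH_LEN = 16  # 8 bytes VA + 4 bytes R_Key + 4 bytes DMA length


class Reth(NamedTuple):
    virtual_address: int
    r_key: int
    dma_length: int


def parse_reth(data: bytes) -> Reth:
    """Decode a RETH from the bytes that follow the BTH."""
    if len(data) < RETH_LEN:
        raise ValueError("RETH needs at least 16 bytes")
    # All fields are big-endian (network byte order)
    return Reth(*struct.unpack("!QII", data[:RETH_LEN]))


# Example: write 4096 bytes at address 0x7f0000001000 using R_Key 0x1234
raw = struct.pack("!QII", 0x7F0000001000, 0x1234, 4096)
print(parse_reth(raw))
```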

ACK Extended Transport Header (AETH)

As explained earlier, the IBTL needs a way to notify the sender when packets are lost or arrive out of sequence. This is done using the AETH extension, which contains the following fields:

- Syndrome: A field that contains response codes indicating success (ACK), error conditions (NAK), or receiver not ready (RNR) status, along with flow control information

- Message Sequence Number (MSN): Indicates the sequence number of the most recently completed message, implying that all messages with lower sequence numbers have been successfully received
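As a rough illustration of how the two fields are packed, the sketch below decodes a 4-byte AETH. It assumes the usual encoding where the syndrome is the top byte, bits 6:5 of the syndrome select ACK, RNR NAK, or NAK, and the low 5 bits carry the credit count, RNR timer, or NAK code. The function name `parse_aeth` and the returned dictionary keys are made up for this example.

```python
import struct

# NAK codes carried in the low 5 bits of the syndrome when bits 6:5 are 0b11
NAK_CODES = {
    0: "PSN sequence error",
    1: "invalid request",
    2: "remote access error",
    3: "remote operational error",
    4: "invalid RD request",
}


def parse_aeth(data: bytes) -> dict:
    """Decode a 4-byte AETH: 8-bit syndrome followed by a 24-bit MSN."""
    if len(data) < 4:
        raise ValueError("AETH needs at least 4 bytes")
    (word,) = struct.unpack("!I", data[:4])
    syndrome = word >> 24
    kind = (syndrome >> 5) & 0b11  # bits 6:5 select the response type
    value = syndrome & 0x1F        # bits 4:0 depend on the type
    if kind == 0b00:
        result = {"type": "ACK", "credit_count": value}
    elif kind == 0b01:
        result = {"type": "RNR NAK", "rnr_timer": value}
    elif kind == 0b11:
        result = {"type": "NAK", "reason": NAK_CODES.get(value, "reserved")}
    else:
        result = {"type": "reserved"}
    result["msn"] = word & 0xFFFFFF
    return result


print(parse_aeth(bytes.fromhex("60000007")))  # a NAK asking for retransmission
```

The "PSN sequence error" NAK is the one that ties back to the PSN in the BTH: it tells the requester that a packet arrived with an unexpected sequence number.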

Custom Extensions

The IBTL specification (see Chapter 9) contains quite a few extensions, some of which caught my attention:

- Compare And Swap: Makes it possible to do lock-free synchronisation.

- Send vs Write: The first can be used for control flow, while the second is used for data flow.

- Immediate extension: Allows side-channel metadata to be exchanged along with the memory being transferred.

I have also been wondering how one knows which extensions are present in the header. The answer is simple: for each opcode, there is a list of extensions to be parsed. The list is defined statically, as part of the specification.
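The static opcode-to-extensions mapping can be sketched as a lookup table. The snippet below covers only a handful of Reliable Connection opcodes, with values and header sizes as I understand them from the specification. The table is deliberately partial, and `RC_OPCODE_HEADERS`, `EXT_SIZES`, and `extension_layout` are hypothetical names for this example.

```python
BTH_LEN = 12

# Size in bytes of each extended header
EXT_SIZES = {"RETH": 16, "AETH": 4, "ImmDt": 4, "AtomicETH": 28, "AtomicAckETH": 8}

# Partial table for the Reliable Connection (RC) transport:
# opcode -> (name, extended headers in the order they follow the BTH)
RC_OPCODE_HEADERS = {
    0x04: ("SEND Only", []),
    0x05: ("SEND Only with Immediate", ["ImmDt"]),
    0x06: ("RDMA WRITE First", ["RETH"]),
    0x07: ("RDMA WRITE Middle", []),
    0x0A: ("RDMA WRITE Only", ["RETH"]),
    0x0B: ("RDMA WRITE Only with Immediate", ["RETH", "ImmDt"]),
    0x0C: ("RDMA READ Request", ["RETH"]),
    0x11: ("Acknowledge", ["AETH"]),
    0x13: ("Compare & Swap", ["AtomicETH"]),
}


def extension_layout(opcode: int):
    """Return (name, [(header, byte offset)], payload offset) for an opcode."""
    name, headers = RC_OPCODE_HEADERS[opcode]
    offset = BTH_LEN
    layout = []
    for header in headers:
        layout.append((header, offset))
        offset += EXT_SIZES[header]
    return name, layout, offset


print(extension_layout(0x0B))
```

A parser built this way never has to guess: once the opcode in the BTH is known, the offsets of every extension and of the payload follow directly from the table.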

The elephant flow challenge

As stated by Toni Pasanen on his blog, The Network Times:

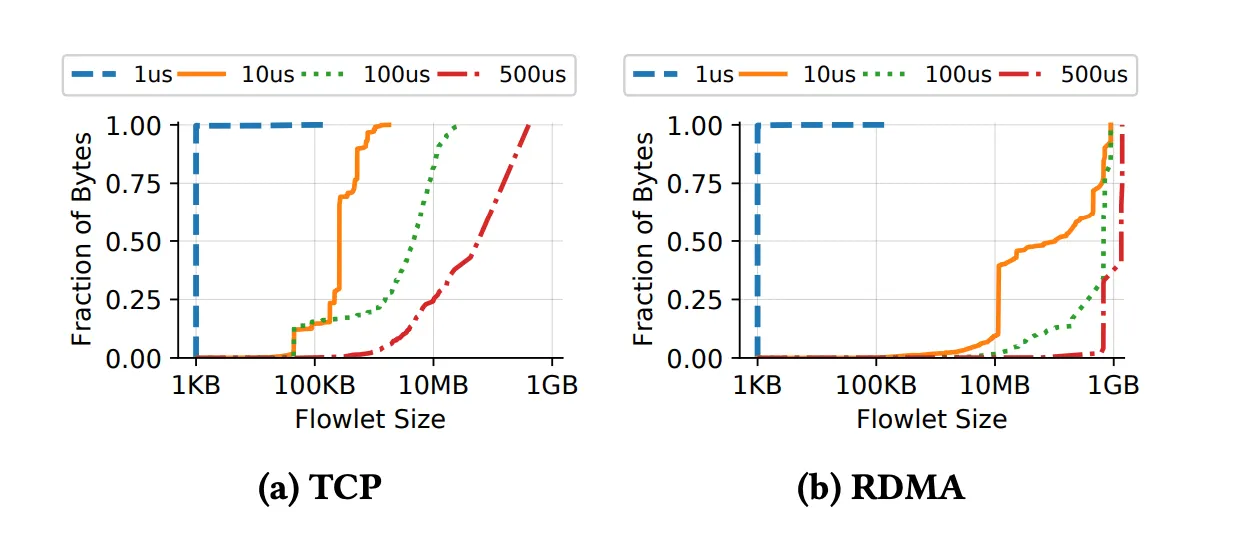

GPU-to-GPU communication creates massive elephant flows, which RDMA-capable NICs transmit at line rate. These flows can easily cause congestion in the backend network.

The ability of RDMA to generate traffic at line rate is amazing. But it also creates an issue with the flowlet size (the size of the burst between two consecutive inactivity gaps): with RDMA elephant flows, the probability of finding an “inactivity gap” in which the flow can be moved to another path becomes significantly lower.

Picture from: Network Load Balancing with In-network Reordering Support for RDMA
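To illustrate the flowlet idea, here is a toy sketch that splits a flow into flowlets based on an inactivity threshold. The timestamps and the 100 µs threshold are invented for the example, and `split_into_flowlets` is a hypothetical helper, not how any particular switch implements it.

```python
def split_into_flowlets(timestamps_us, gap_us):
    """Group packet timestamps into flowlets separated by idle gaps >= gap_us."""
    flowlets = []
    for ts in timestamps_us:
        if flowlets and ts - flowlets[-1][-1] < gap_us:
            flowlets[-1].append(ts)  # still inside the current burst
        else:
            flowlets.append([ts])    # idle gap seen: a new flowlet starts
    return flowlets


# A bursty flow has idle gaps that a load balancer can exploit
bursty = [0, 10, 20, 500, 510, 1200]
# A line-rate flow sends back to back, with no idle gap at all
line_rate = list(range(0, 1200, 10))

print(len(split_into_flowlets(bursty, gap_us=100)))     # 3 flowlets
print(len(split_into_flowlets(line_rate, gap_us=100)))  # 1 flowlet
```

The line-rate flow collapses into a single flowlet, so a flowlet-based load balancer never gets a chance to re-route it.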

The load balancing challenge

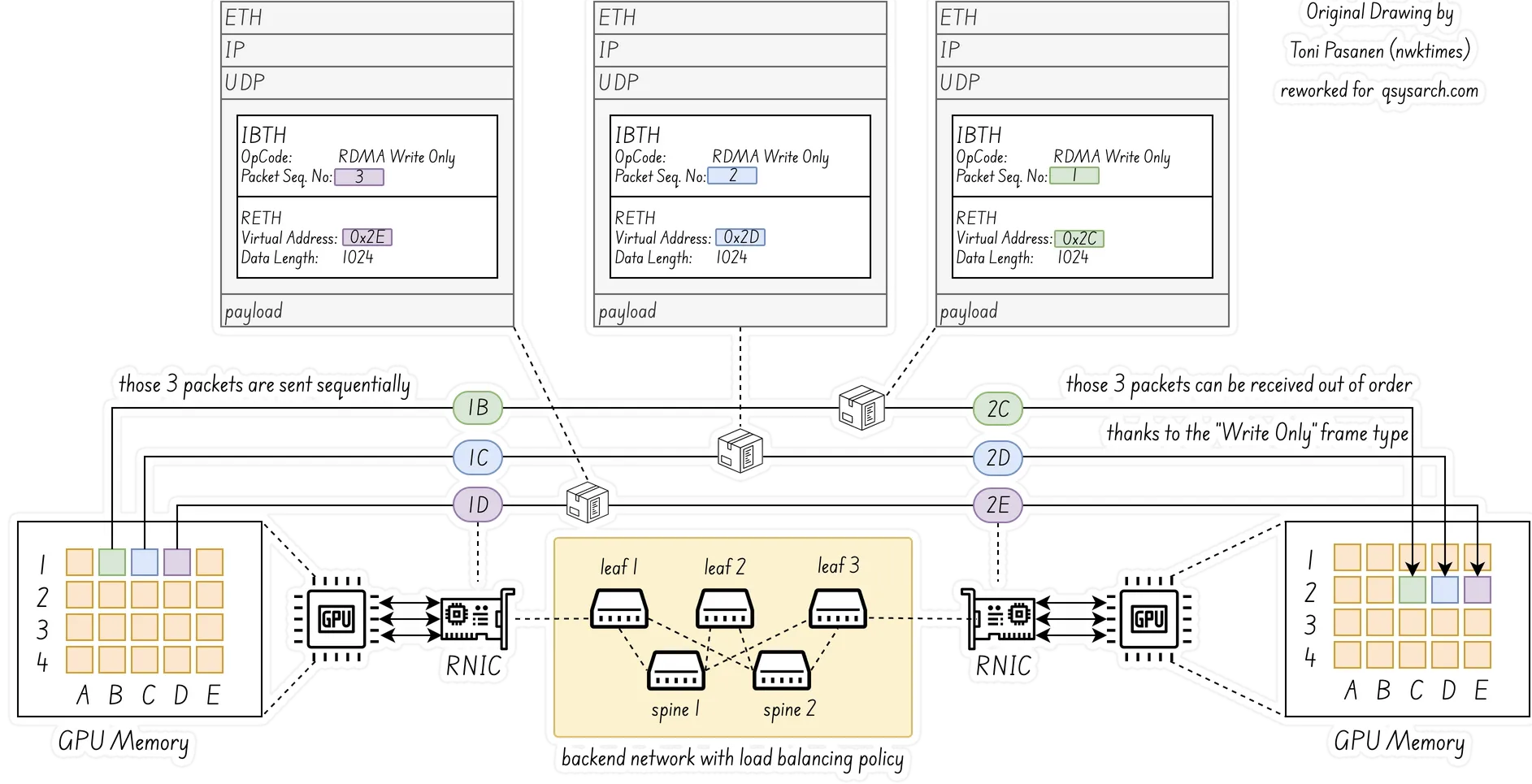

The other complexity is load balancing in spine-leaf topologies, where packets can arrive out of order on a single queue pair when packet spraying is used. Nvidia addresses this with the “RDMA Write Only” operation, where each packet carries its own RETH and can therefore be written to memory independently of arrival order.

Original picture from: The Network Times
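A toy model can show why a per-packet target address makes reordering harmless. RDMA Write Only packets each carry a RETH, so in the sketch below every packet is represented as an `(offset, payload)` pair, where the offset stands in for the RETH virtual address. This is only an illustration under that assumption, with a hypothetical `place_packets` helper. It says nothing about how a real NIC handles PSNs or completions.

```python
import random


def place_packets(packets, region_size):
    """Write each (offset, payload) pair into a buffer, in arrival order."""
    memory = bytearray(region_size)
    for offset, payload in packets:
        memory[offset:offset + len(payload)] = payload
    return bytes(memory)


message = bytes(range(256)) * 16  # 4096 bytes to transfer
mtu = 1024                        # payload bytes per packet (made-up value)

# One self-describing packet per MTU-sized chunk
packets = [(off, message[off:off + mtu]) for off in range(0, len(message), mtu)]

# Packet spraying: packets take different paths and arrive in any order
sprayed = packets[:]
random.Random(7).shuffle(sprayed)

# The resulting memory is identical regardless of arrival order
assert place_packets(sprayed, len(message)) == message
```

By contrast, in a multi-packet RDMA Write only the first packet carries the RETH, so the receiver needs the packets in order to know where each payload belongs.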

Conclusion

This is an “active” memo which I will continue to update as I find more information about the IBTL.

There is no conclusion yet, except that RDMA is a very elegant way to solve the network efficiency challenge, and it comes with a set of new challenges.

References: