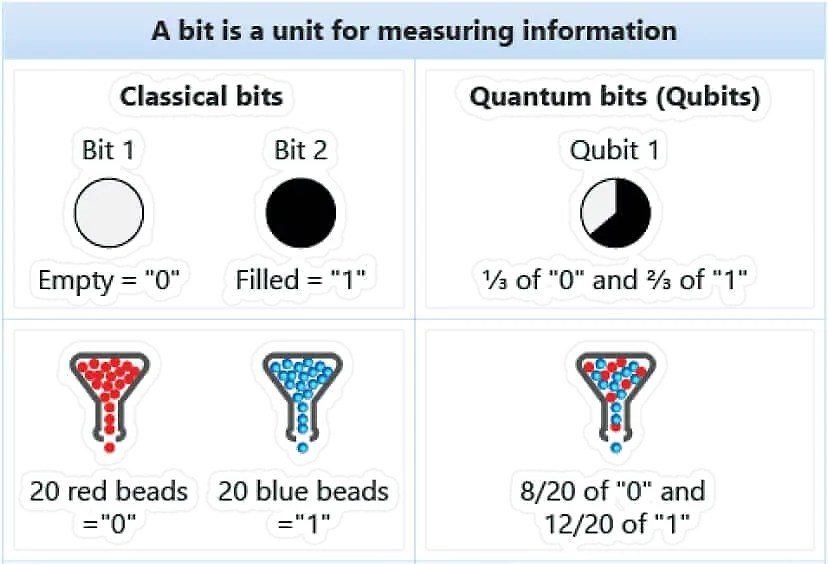

What is a Qubit (Credits: Microsoft)

It is commonly acknowledged that the quantum computing industry is still in its very early stages, with hopes of reaching useful milestones within 5 to 10 years. This Sunday morning memo is a brainstorming session about the potential future architecture of quantum computing machines.

Before you read on, keep in mind that what follows is just a look at possible futures, not a prediction of what will or should happen. As Steve Jobs said,

You can’t connect the dots looking forward. You can only connect them looking backward. So you have to trust that the dots will somehow connect in your future. You have to trust in something — your gut, destiny, life, karma, whatever. This approach has never let me down, and it has made all the difference in my life.

Back to the Future Evolutions

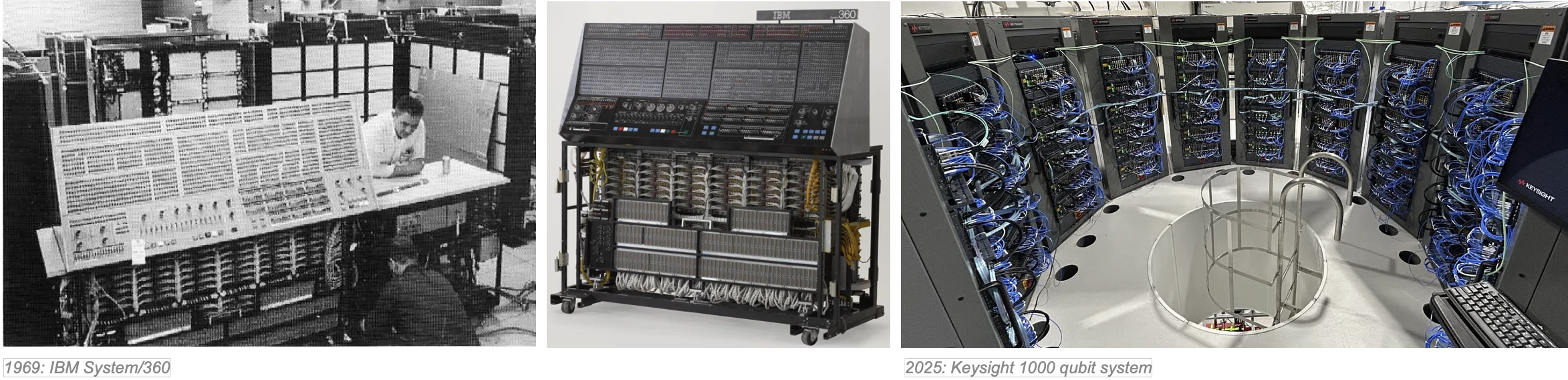

Think of the 1960s and 70s, when large and bulky mainframes were the norm, and one can immediately see the resemblance with how quantum computing systems look today. For example, here is a comparison of the IBM 360/91 from 1966 with the Keysight 1000-qubit system deployed at G-QuAT in 2025.

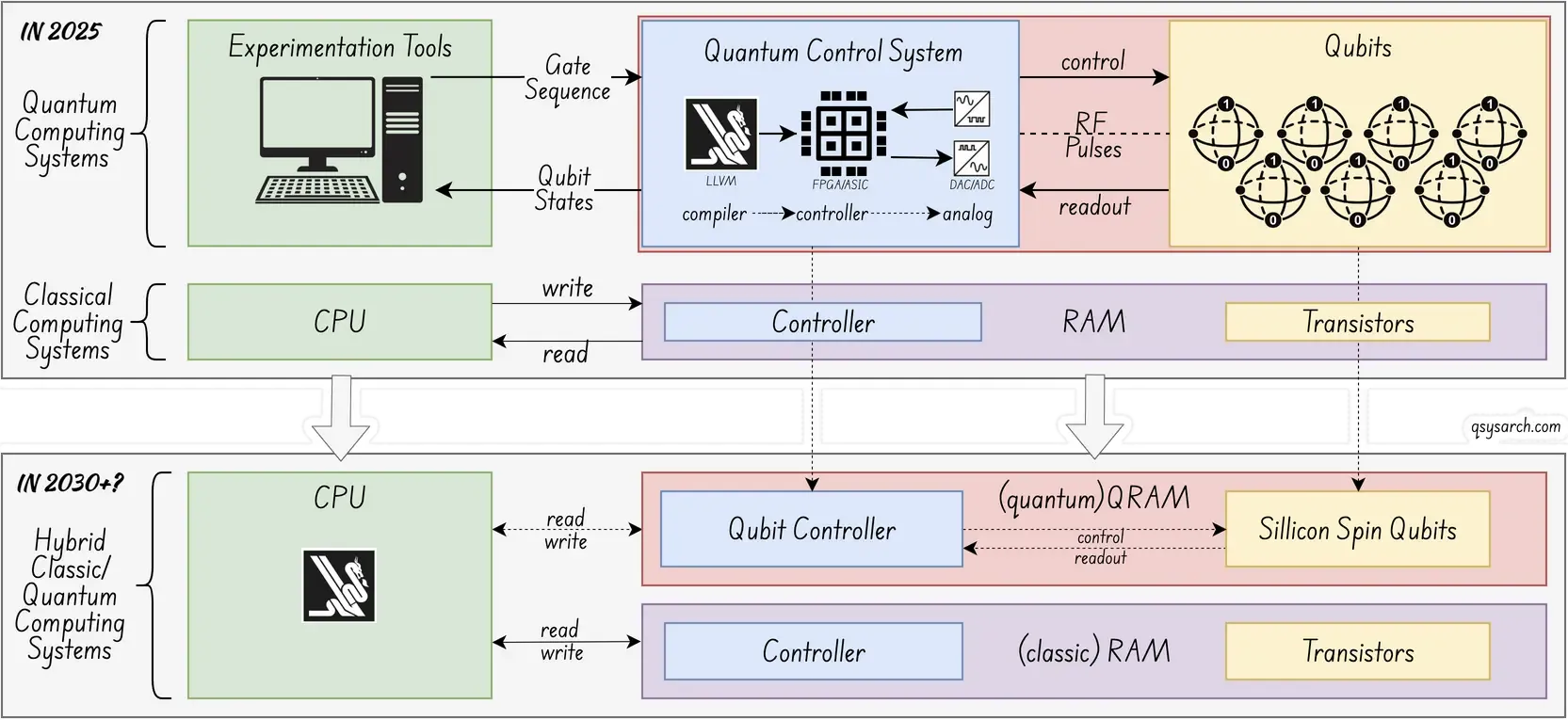

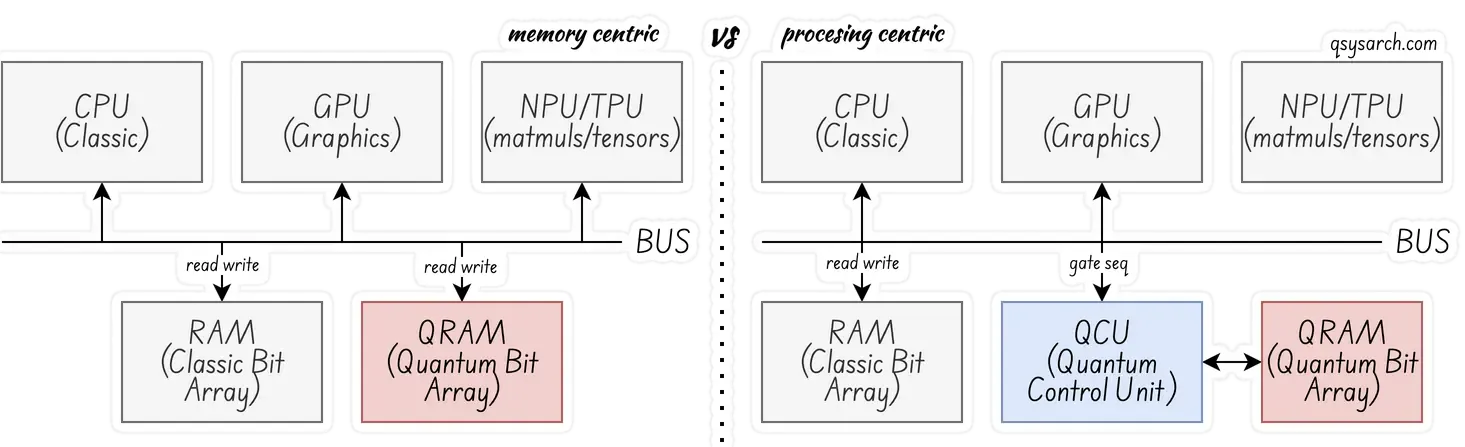

So, why not allow ourselves to imagine what a future quantum computing system could look like? The diagram below illustrates my take on it. The idea is simply to compare today's memory chips, which combine a complex bit-storage array with a controller that accesses those bits, with the way quantum control systems are deployed today. What may be controversial is that, rather than treating the qubit control as a processing unit, I compare it to a memory unit.

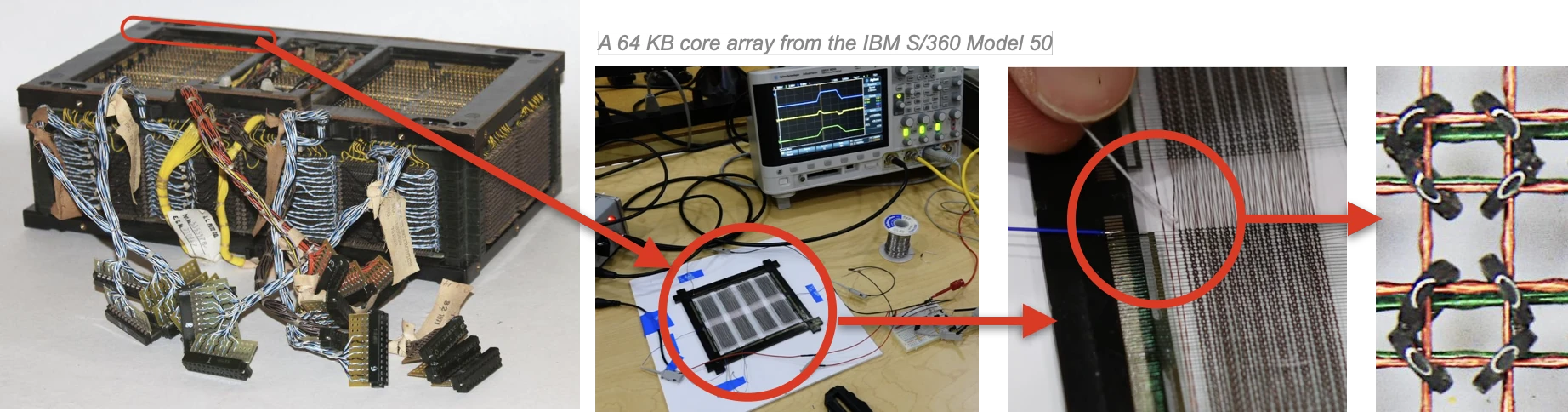

This is where I stumbled upon Ken Shirriff’s blog post about the core memory in the IBM S/360. The post and its video are absolutely fantastic; I could never have imagined how much memory technology has evolved. Seeing how current pulses are used to “drive” the core memory strongly reminds me of the techniques used to control and read out qubit “entities”, which I will from now on refer to as “qubit memories”.
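To make the pulse analogy concrete, here is a toy model of coincident-current core memory, the textbook scheme of that era. It is a simplification I am assuming for illustration, not a reproduction of the S/360 circuitry described in Ken Shirriff’s post: inhibit wiring, hysteresis curves, and timing are all ignored. Each drive line carries half of the switching current, so only the core at the intersection of the selected X and Y lines flips. Reading is destructive: the core is driven toward 0, and a flip picked up on the sense wire means it held a 1, which then has to be written back. The class and method names are hypothetical.

```python
# Toy model of coincident-current core memory (simplified, hypothetical names).
# A core flips only when the current through it reaches the full switching
# threshold; a single X or Y drive line supplies only half of it.

HALF = 0.5        # current on one drive line, in units of the switching threshold
THRESHOLD = 1.0


class CorePlane:
    def __init__(self, rows, cols):
        self.cores = [[0] * cols for _ in range(rows)]  # magnetization stored as 0/1

    def _pulse(self, x, y, direction):
        """Drive row x and column y with half-current pulses.

        direction=+1 pushes toward 1, -1 toward 0. Returns True if the selected
        core flipped, i.e. what the sense wire would detect."""
        target = 1 if direction > 0 else 0
        flipped = False
        for r, row in enumerate(self.cores):
            for c in range(len(row)):
                current = HALF * ((r == x) + (c == y))  # 0, 0.5 (half-selected) or 1.0
                if current >= THRESHOLD and row[c] != target:
                    row[c] = target
                    flipped = True
        return flipped

    def write(self, x, y, bit):
        self._pulse(x, y, +1 if bit else -1)

    def read(self, x, y):
        """Destructive read: drive the core toward 0; a flip means it held a 1."""
        was_one = self._pulse(x, y, -1)
        if was_one:
            self.write(x, y, 1)  # restore the value the read just erased
        return int(was_one)


plane = CorePlane(4, 4)
plane.write(2, 3, 1)
print(plane.read(2, 3), plane.read(2, 3), plane.read(0, 3))  # 1 1 0
```

The destructive read is where the analogy with qubit readout is closest. The difference is that a core’s value can simply be rewritten after reading, whereas a qubit’s pre-measurement superposition cannot be restored from the readout result.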

There is, of course, a very long way to go before one could think about an integrated silicon die combining both silicon qubits (or similar modalities) and control electronics. At the current stage of development, qubits need to be operated at cryogenic temperatures to shield them from environmental noise; yes, qubits are “sensitive” entities. Furthermore, the control electronics are themselves noisy and generate heat, so they do not sit well next to the qubits.

Once the noise challenge has been addressed, likely through a breakthrough in materials science (think ferroelectric memories or terahertz transistors), the industry will then have to move toward integrated ICs with advanced packaging, just as is done for silicon photonics. I do not pretend to know how this will happen, and even less when, but looking back at the evolution of mainframes, one can be confident that it will happen. And once it does, it opens the door to every single piece of electronic equipment being bundled with a quantum computing element.

Note [2025-10-28]: The diagram above implicitly assumes a silicon-type qubit modality, but this does not have to be the case. For example, some optically addressable qubit modalities do not face the cryogenic challenge, but rather a scalability and miniaturization one. One can definitely expect breakthroughs in those modalities too; think of optically controlled magnetoresistive RAM.

What makes a QRAM a “quantum element”?

This digression would not be interesting without answering the key question: what makes a Quantum RAM a “quantum element” of interest? Standard RAM, whether Static RAM (SRAM), Dynamic RAM (DRAM), or Synchronous Dynamic RAM (SDRAM), works on the same basic principle: reading and writing bits in an array indexed by a linear address space.

Let’s assume that qubits can also be addressed through a linear address space. In that case, what makes such an array more interesting than a simple array of Boolean bits? Here are a few of the considerations one needs to take into account when thinking about “quantum bits”:

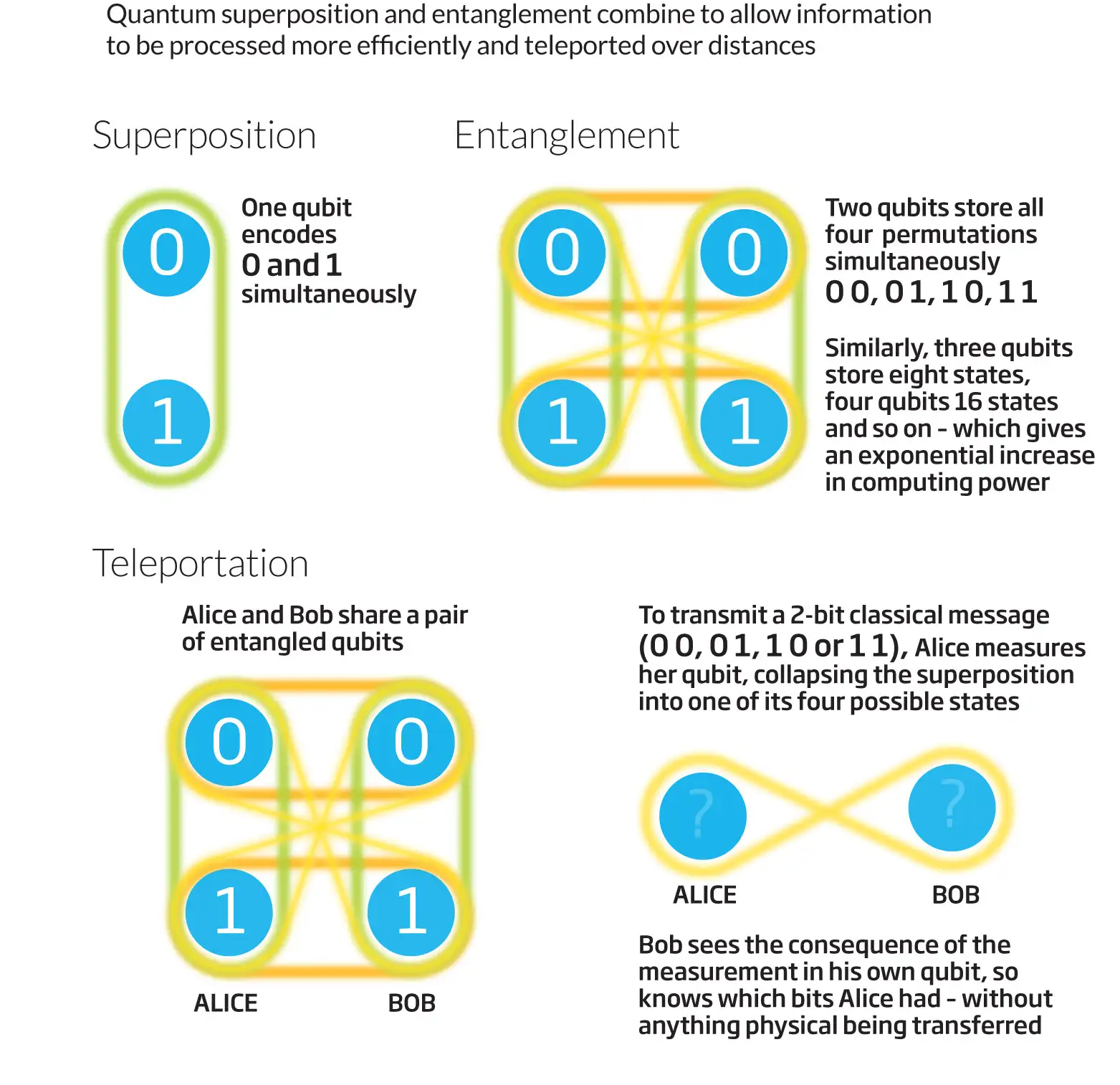

Credits: Quantum information: The promise

Entanglement: Multiple qubits can exhibit quantum entanglement. Entangled qubits are correlated with each other and form a single system. Even when they are far apart, measuring the state of one of the qubits tells us the state of the other, without needing to measure it directly.

Superposition: Superposition enables quantum algorithms to use other quantum mechanical phenomena, such as interference and entanglement. Together, superposition, interference, and entanglement create computing power that can solve certain problems much faster, in some cases exponentially faster, than classical computers.

It is often said that the only truly quantum concept is entanglement. Superposition is only a way to express that a system can be in two or more known states without being observed; once observed, the system “collapses” into a single, definite state. There is nothing so special about superposition; what is special is that entanglement can be used to “observe” without “measuring”, thus preserving the quantum properties of the qubits.
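A tiny state-vector simulation can illustrate the correlation described above. The sketch below, in plain Python with hypothetical helper names, prepares the two-qubit Bell state (|00⟩ + |11⟩)/√2 with a Hadamard followed by a CNOT, then samples measurements using the Born rule (probability = |amplitude|²). Each qubit individually reads 0 or 1 at random, yet the two readouts always agree. It is a classical simulation for illustration only, not a model of any particular hardware.

```python
import math
import random

# Two-qubit state vector over basis states indexed as 2*q1 + q0:
# index 0 = |00>, 1 = |01>, 2 = |10>, 3 = |11>.


def apply_h(state, target):
    """Apply a Hadamard gate to qubit `target` (0 or 1)."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(4):
        if not (i >> target) & 1:  # visit each (target=0, target=1) pair once
            j = i | (1 << target)
            a, b = state[i], state[j]
            new[i] = s * (a + b)
            new[j] = s * (a - b)
    return new


def apply_cnot(state, control, target):
    """Flip `target` on every basis state where `control` is 1."""
    new = state[:]
    for i in range(4):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new


def measure_all(state, rng):
    """Sample one basis state with probability |amplitude|^2; return (q1, q0)."""
    probs = [abs(a) ** 2 for a in state]
    idx = rng.choices(range(4), weights=probs)[0]
    return (idx >> 1) & 1, idx & 1


def bell_pair():
    state = [1, 0, 0, 0]            # start in |00>
    state = apply_h(state, 0)       # put q0 into superposition
    return apply_cnot(state, 0, 1)  # entangle q1 with q0: (|00> + |11>)/sqrt(2)


rng = random.Random(7)
shots = [measure_all(bell_pair(), rng) for _ in range(1000)]
print(all(q1 == q0 for q1, q0 in shots))  # the two readouts always agree
print(sum(q0 for _, q0 in shots))         # yet each qubit reads 1 in roughly half the shots
```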

So, what kind of interface would this QRAM need to expose over its “superposed-state qubits” to qualify as a “Quantum RAM”?

- means to control each qubit individually with single-qubit gates, with “reset” as a pseudo-gate.

- means to control multiple qubits with conditional (multi-qubit) gates, using entanglement under the hood.

- means to read out each qubit, by reducing or “collapsing” the qubit into a single, definite state.
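The three capabilities listed above can be sketched as a small interface. The `QRAM` class below is purely hypothetical: it addresses qubits by a linear index, exposes single-qubit gates as “writes”, a CNOT as the conditional multi-qubit gate, readout as a “read” that collapses the state, and `reset` as a pseudo-gate built from a readout plus a conditional X. A toy state-vector simulator stands in for real hardware, so it only scales to a handful of qubits.

```python
import math
import random

S = 1 / math.sqrt(2)
# 2x2 single-qubit gate matrices ((a, b), (c, d)) acting on (|0>, |1>) amplitudes
GATES = {
    "X": ((0, 1), (1, 0)),
    "Z": ((1, 0), (0, -1)),
    "H": ((S, S), (S, -S)),
}


class QRAM:
    """Hypothetical 'quantum RAM' facade over a toy state-vector simulator."""

    def __init__(self, n_qubits, seed=None):
        self.state = [0j] * (2 ** n_qubits)
        self.state[0] = 1 + 0j  # every qubit starts in |0>
        self.rng = random.Random(seed)

    def gate(self, addr, name):
        """'Write': apply a single-qubit gate to the qubit at address `addr`."""
        (a, b), (c, d) = GATES[name]
        bit = 1 << addr
        for i in range(len(self.state)):
            if not i & bit:
                j = i | bit
                x, y = self.state[i], self.state[j]
                self.state[i] = a * x + b * y
                self.state[j] = c * x + d * y

    def cnot(self, ctrl, tgt):
        """Conditional gate: flip qubit `tgt` wherever qubit `ctrl` is 1."""
        c, t = 1 << ctrl, 1 << tgt
        for i in range(len(self.state)):
            if i & c and not i & t:
                j = i | t
                self.state[i], self.state[j] = self.state[j], self.state[i]

    def read(self, addr):
        """'Read': measure one qubit and collapse the state (Born rule)."""
        bit = 1 << addr
        p1 = sum(abs(amp) ** 2 for i, amp in enumerate(self.state) if i & bit)
        outcome = 1 if self.rng.random() < p1 else 0
        norm = math.sqrt(p1 if outcome else 1 - p1)
        for i in range(len(self.state)):
            if bool(i & bit) == bool(outcome):
                self.state[i] /= norm  # keep and renormalize consistent branch
            else:
                self.state[i] = 0j     # discard the branch that was not observed
        return outcome

    def reset(self, addr):
        """Pseudo-gate: read the qubit, then flip it back to |0> if it read 1."""
        if self.read(addr):
            self.gate(addr, "X")


mem = QRAM(n_qubits=3, seed=1)
mem.gate(0, "H")    # write: put qubit 0 into superposition
mem.cnot(0, 2)      # conditional gate: entangle qubit 2 with qubit 0
a, b = mem.read(0), mem.read(2)
print(a == b)       # True: the entangled pair reads out identically
mem.reset(0)
print(mem.read(0))  # 0
```

Keeping `reset` as a composition of `read` and `gate` mirrors the list above, where reset is a pseudo-gate rather than a primitive of its own.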

I must admit that this is an oversimplified and naïve perspective that my physicist colleagues would go to war against, although I do believe one needs to simplify the equation with the help of better abstractions. I also admit that I do not really like calling a “quantum bit array” a “quantum memory”, but the name “QRAM” is so appealing, and such a powerful metaphor.

Processor vs Memory

One of the dilemmas with the “memory metaphor” is whether it is acceptable to “simplify” the quantum control system and let it become an ancillary part of the memory, or whether there needs to be a QCU (Quantum Control Unit) that, like a GPU (Graphics Processing Unit), operates on the quantum bits, in which case the emphasis is on executing gate sequences.

Of course, one may argue that both are the same: in the first case, the processor is nothing more than the controller inside the memory array. But from a system architecture perspective, what matters is the interface being exposed. In the memory case, the actual gate sequencer is the CPU/GPU, and the interaction with the QRAM is based on gates (writes) and readouts (reads). In the quantum processor case, the sequencer lives in the QCU, and the interaction is based on gate sequences. For the first to work, the CPU/GPU would have to guarantee some level of timing synchronization across qubits for multi-qubit gates.
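The difference between the two interfaces can be sketched by counting host-to-device transactions. Everything below (`Op`, `Bus`, and the two `run_*` functions) is hypothetical and models only the traffic pattern, not the quantum state: in the memory style, the CPU/GPU is the sequencer and sends each gate and readout separately; in the processor style, it ships the whole gate sequence to the QCU once.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Op:
    kind: str      # hypothetical op kinds: "gate", "cgate" (multi-qubit) or "read"
    addrs: tuple   # qubit address(es) the op touches
    name: str = "" # gate name, e.g. "H" or "CNOT"


@dataclass
class Bus:
    """Records host<->device transactions; stands in for a real interconnect."""
    transactions: list = field(default_factory=list)

    def send(self, payload):
        self.transactions.append(payload)


def run_memory_style(bus, program):
    """QRAM model: the host is the sequencer and issues every gate ('write')
    and every readout ('read') as its own transaction."""
    for op in program:
        bus.send(op)


def run_processor_style(bus, program):
    """QCU model: the host ships the whole gate sequence once; the QCU
    sequences it locally, including the timing of multi-qubit gates."""
    bus.send(tuple(program))


bell = [Op("gate", (0,), "H"), Op("cgate", (0, 1), "CNOT"),
        Op("read", (0,)), Op("read", (1,))]

mem_bus, qcu_bus = Bus(), Bus()
run_memory_style(mem_bus, bell)
run_processor_style(qcu_bus, bell)
print(len(mem_bus.transactions), len(qcu_bus.transactions))  # 4 1
```

In the memory style, the two-qubit `cgate` is the operation for which the host itself would have to guarantee that both addresses are driven with the right relative timing, which is the synchronization burden mentioned above.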

Scaling Up

One of the many challenges with qubits is scale, in terms of both quantity and reliability. Quantity is the ability to pack more qubits onto the same die, while reliability refers to the ability to create qubits with better characteristics. I purposely did not mention the decoherence time, as a longer decoherence time may not always be beneficial; I’ll write about this in a future memo.

Coming back to reliability, it is worth noting that many of today’s RAM chips include internal ECC (error-correcting code) in their controllers. Likewise, a QRAM could benefit from having QEC (Quantum Error Correction) bundled with its controller. This would create “Reliable Quantum Memories”.
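As an illustration of what a controller-bundled correction step does, here is the three-bit repetition code in plain Python. Its two parity checks (b0⊕b1 and b1⊕b2) locate a single flipped bit without revealing the stored value. The three-qubit bit-flip code relies on the same idea, with ancilla qubits measuring those parities. This is a classical sketch under the assumption that only bit-flip errors occur. It does not capture phase errors, which real QEC codes must also handle. Function names are illustrative.

```python
# Three-bit repetition code with syndrome decoding (classical sketch, bit flips only).

# Syndrome (b0^b1, b1^b2) -> index of the bit to flip back (None = no error seen)
SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def encode(bit):
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]


def syndrome(word):
    """Parity checks that locate an error without revealing the stored value:
    both are 0 for 000 and for 111."""
    return (word[0] ^ word[1], word[1] ^ word[2])


def correct(word):
    """Flip back the bit pointed to by the syndrome, if any."""
    flip = SYNDROME_TO_FLIP[syndrome(word)]
    if flip is not None:
        word[flip] ^= 1
    return word


def decode(word):
    return word[0]


for logical in (0, 1):
    for position in range(3):
        word = encode(logical)
        word[position] ^= 1  # inject a single bit-flip error
        assert decode(correct(word)) == logical
print("all single bit-flip errors corrected")
```

Two simultaneous flips are decoded to the wrong value, which is why practical codes use larger code distances.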

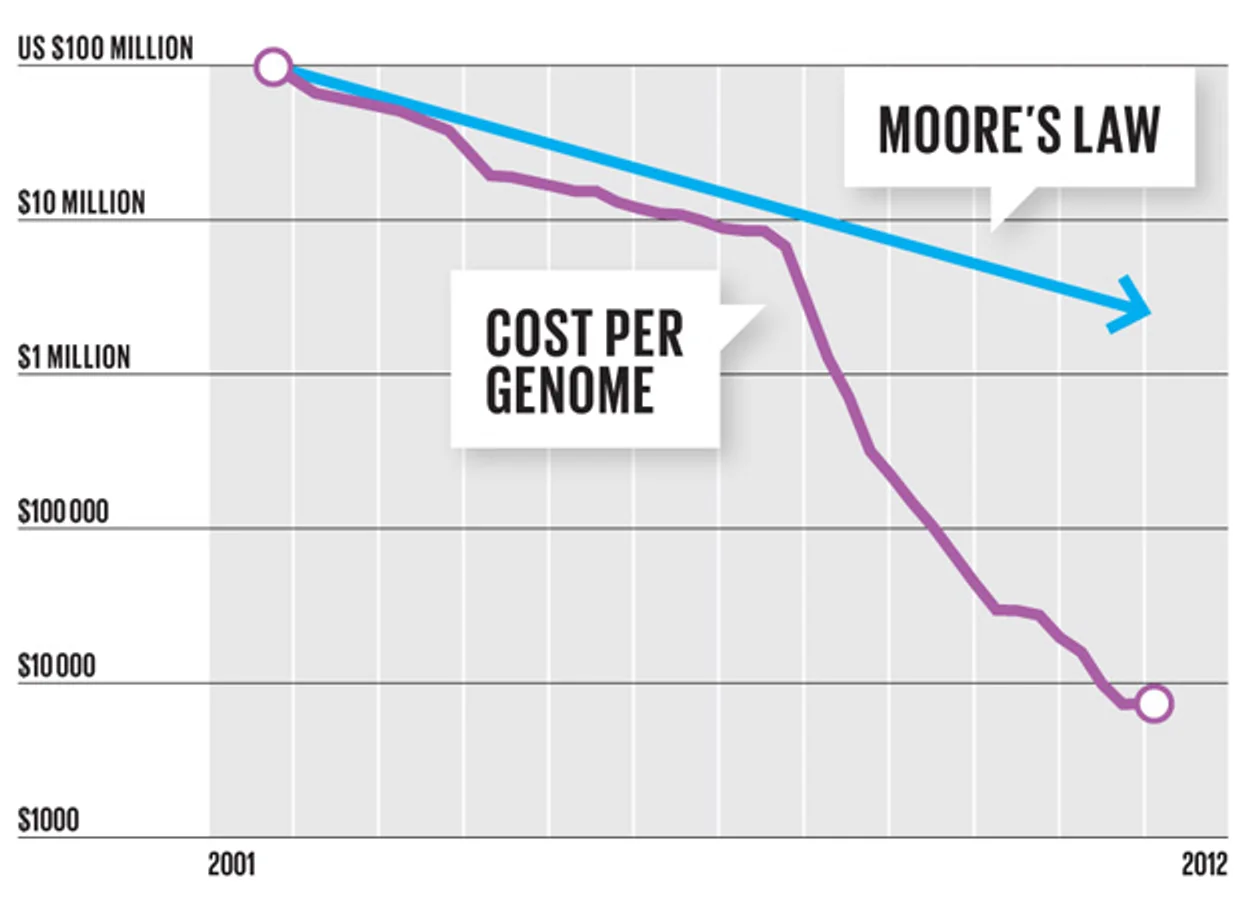

What I like about the quantum memory unit abstraction is that one can easily relate it to the evolution of the DNA sequencing industry. Back in the day, DNA sequencers were very expensive because they aimed at perfectly matching the sequence, one fragment at a time, and it indeed took 32 years to sequence the full human genome. With the introduction of NGS (Next Generation Sequencing), it only took 1 day, at a much lower price. The reason: a massively parallel approach (Image credits: IEEE Spectrum).

So, maybe we could consider that, once we are able to create QRAM ICs, the key to reliability will be massive redundancy, with thousands of co-packaged QRAMs all running the same computation, rather than trying to make a single logical-qubit-based QRAM perfect, as QEC currently aims to do?
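Whether redundancy pays off can be estimated with a quick binomial calculation. The sketch assumes that each of `n_copies` QRAMs independently returns the wrong answer with probability `p`, and that the correct answer is a single value that can be settled by majority vote. Many quantum algorithms output a probability distribution instead, so this is a simplification. `majority_error` is a hypothetical helper name.

```python
from math import comb


def majority_error(n_copies, p):
    """Probability that a majority vote over `n_copies` independent copies is
    wrong, when each copy is wrong with probability `p`."""
    if n_copies % 2 == 0:
        raise ValueError("use an odd number of copies to avoid ties")
    k_min = n_copies // 2 + 1  # wrong copies needed to outvote the correct ones
    return sum(comb(n_copies, k) * p**k * (1 - p) ** (n_copies - k)
               for k in range(k_min, n_copies + 1))


for n in (1, 3, 11, 101):
    print(n, majority_error(n, p=0.2), majority_error(n, p=0.6))
```

The vote error drops quickly with the number of copies as long as each copy is right more often than not (p < 0.5). With p > 0.5, adding copies makes the vote worse, so massive redundancy still depends on reasonably good individual qubits.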

Conclusion

Voilà! I will come back to this memo in 5 years and see how much of it, if any, has come true!

References

- A look at IBM S/360 core memory: In the 1960s, 128 kilobytes weighed 610 pounds

- Ferroelectric Memory Devices: How to store the information of the future?

- You Can Connect the Dots Only by Looking Backward

- Magnonic processors could revolutionise computing

- Optical control for magnetoresistive RAM

- Helicity-dependent optical control of the magnetization state emerging from the Landau-Lifshitz-Gilbert equation

DrawIO diagrams used in this memo: